In this way, we create a for loop that sleeps for 0.05 seconds and then advances the progress bar by 1 unit (the progress bar was instantiated with a value of 0). The loop repeats this operation 100 times, since our progress bar’s range goes from 0 to 100 units.

This progress bar indicates that the prediction is ongoing until it reaches its maximum value and stops extending.
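The loop described above can be sketched as a small helper. This is only an illustration: the name `run_progress` and its parameters are hypothetical, and the helper accepts any object with a `progress(value)` method, which is what Streamlit's `st.progress(0)` returns.

```python
import time


def run_progress(bar, steps=100, delay=0.05):
    """Advance `bar` one unit at a time, pausing `delay` seconds before each step.

    `bar` is assumed to expose a `progress(value)` method, like the element
    returned by Streamlit's `st.progress(0)`.
    """
    for step in range(steps):
        time.sleep(delay)       # pause to simulate the ongoing prediction
        bar.progress(step + 1)  # the bar started at 0, so it goes 1, 2, ..., steps
```

Inside the app, and assuming the bar lives in the sidebar like the diagnosis message, the helper could be called as `run_progress(st.sidebar.progress(0))`.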

When the progress bar is completely extended, we can print the diagnosis on the screen, explaining what kind of prediction we are dealing with. For this, we can type the following:

    if diagnosis == 0:
        st.sidebar.success("DIAGNOSIS: NO COVID-19")
    else:
        st.sidebar.error("DIAGNOSIS: COVID-19")

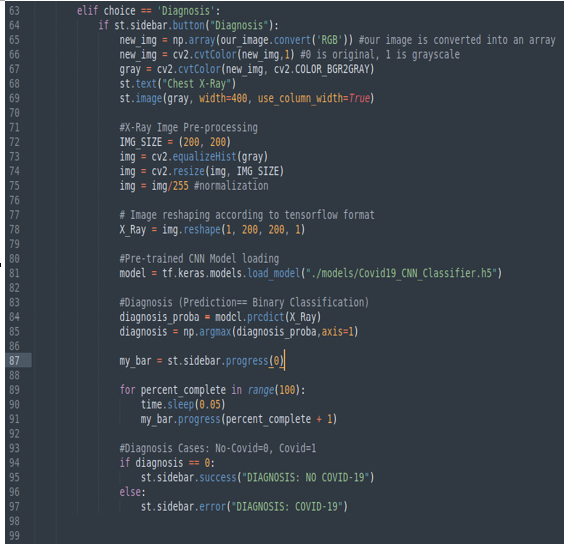

This is the code we added for the frontend part – that is, the part that is visualized in the browser:

Figure 10.8: How we get the prediction and visualize it on the screen

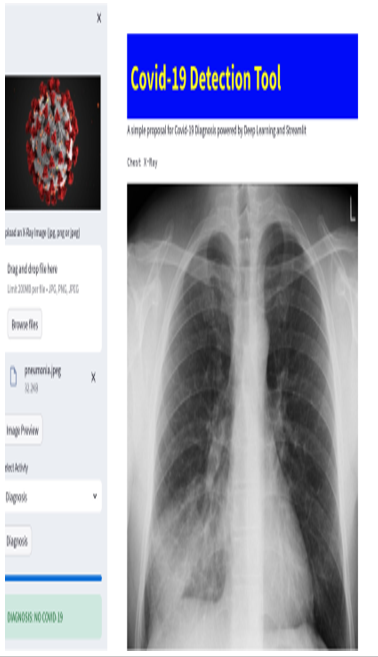

This is the result in the browser in the case of a no Covid prediction:

Figure 10.9: No Covid diagnosis

Since the model we are using to perform the prediction is just a toy model and there is no clinical value in the diagnosis that’s made using it, it’s better to add a final disclaimer to our application.

Let’s add something like this:

    st.warning("This Web App is just a DEMO about Streamlit and Artificial Intelligence and there is no clinical value in its diagnosis!")

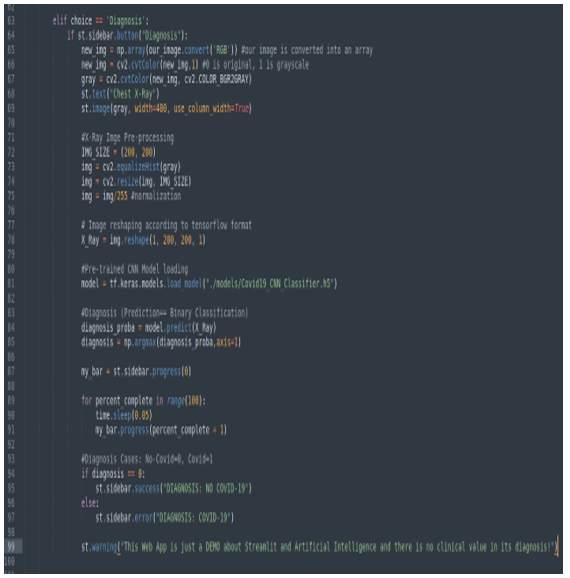

Our final code for the Diagnosis voice of the menu is as follows:

Figure 10.10: Complete code for the “Diagnosis” voice of the menu

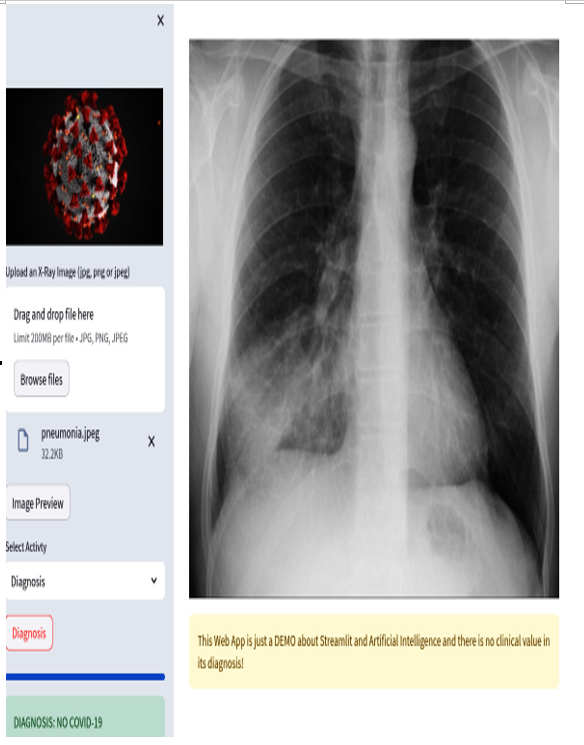

And this is the disclaimer on the browser:

Figure 10.11: Prediction with the disclaimer

Please remember that the point here is not to get a very well-performing model to predict cases of Covid-19 but to understand how to integrate AI models inside Streamlit.

Let’s dive deeper into what we achieved in this chapter.

First of all, AI models can be trained not only with TensorFlow, as in our web application, but also with other packages such as scikit-learn. For this reason, before loading a model into your Streamlit web application, carefully read the documentation of the specific package that was used to train it. Different packages usually provide different functions for loading their models, so the loading code you write depends on the package behind the model you decide to adopt.
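To make the difference concrete, here is a minimal sketch that picks a loading function based on the file extension of the saved model. The helper names (`loader_name_for`, `load_model`) and the extension-to-package mapping are assumptions made for illustration, not a convention enforced by either package. It relies on `keras.models.load_model` for Keras/TensorFlow models and on `joblib.load`, a common way to restore persisted scikit-learn models.

```python
from pathlib import Path


def loader_name_for(model_path):
    """Guess which package should load the file (assumed extension convention)."""
    suffix = Path(model_path).suffix.lower()
    if suffix in {".h5", ".keras"}:
        return "keras"
    if suffix in {".joblib", ".pkl"}:
        return "joblib"
    raise ValueError(f"Unsupported model file: {model_path}")


def load_model(model_path):
    """Load a saved model with the package that matches its file extension."""
    kind = loader_name_for(model_path)
    if kind == "keras":
        # Imported lazily so that TensorFlow is only required for Keras models.
        from tensorflow import keras
        return keras.models.load_model(model_path)
    # Otherwise assume a scikit-learn model that was saved with joblib.dump.
    import joblib
    return joblib.load(model_path)
```

Keeping the imports inside `load_model` means the app only needs the package that matches the model it actually loads. Whatever the package, check its documentation for the supported file formats before relying on a mapping like this one.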